2021iii24, Wednesday: Does it add up?

The slipperiness of statistics, and why we advocates need to learn to love numbers. Plus: wise words from the US on design.

Short thought: I have a problem with numbers: I like them.

Don’t get me wrong. I’m not a mathematician. My formal maths education stopped at A-level, decades ago, and has only restarted recently as I’ve sought to help my daughter with her GCSE maths studies through lockdown.

But numbers don’t scare me, and there’s an ethereal beauty to maths which always appeals.

And that’s the problem. I sometimes find it hard to understand just how daunting maths - and particularly statistics, perhaps - can be to many people. To be clear: that’s a failure of empathy on my part, not any failing on theirs.

Why “particularly statistics”? Because, I think, they can often defeat common sense. And while Darrell Huff’s seminal book “How to Lie with Statistics” overdoes it (Huff later became a key smokescreen for Big Tobacco, unfortunately), the fact remains that using stats to obfuscate instead of illuminate is an old and well-used trick because it works.

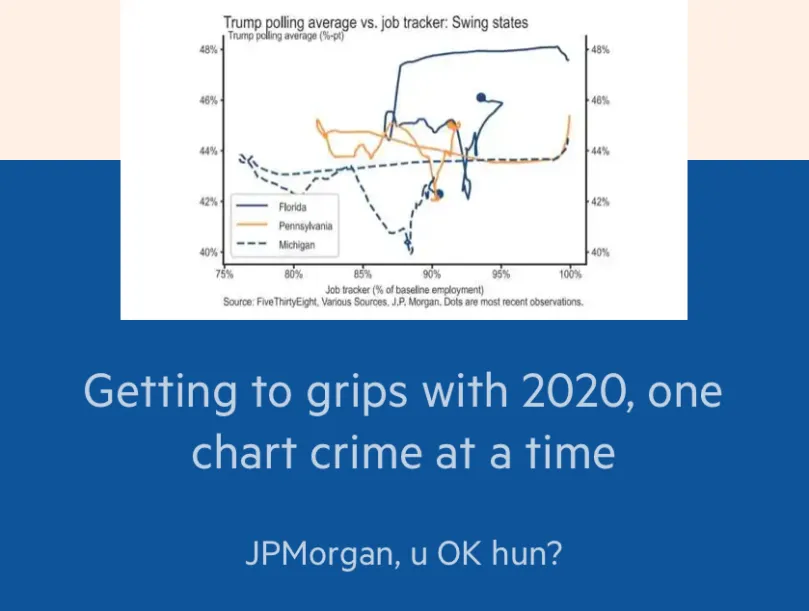

(Chart crime is a subset of lying with statistics, or perhaps an overlapping circle on a Venn diagram. Because often chart crime arises from negligence, not malice. FT Alphaville’s Axes of Evil series, from which the above illustration is drawn, is an excellent set of examples.)

A great illustration of the “common sense is wrong” problem is highlighted in a piece by a Conservative MP, Anthony Browne. (I don’t usually link to pieces by Tory MPs on ConservativeHome. But this, despite the clickbait headline about government policy, is really good.) Anthony says his constituents are up in arms because their kids are getting sent home from school on positive LFD Covid tests, and kept away even when they have a negative PCR test thereafter. Surely the PCR tests are gold standard? This can’t be right.

Well, yes it can, says Anthony. And he’s spot on. The issue arises because of the counter-intuitive way that false positives (getting a yes when it should be a no) and false negatives (the other way round) interact with large populations with a relatively low incidence of what you’re testing for.

Put simply (and I’m relying on Anthony for the false positive/false negative rates, although I’ve run the calculation myself):

- Imagine a million kids, and 0.5% of them - 1 in 200, or 5,000 - have the Bug.

- The LFD test rarely flags a kid who’s clean (only 0.03% false positives - only a tiny fraction of kids without the Bug will be told they have it), but a negative test is much more unreliable (49.9% false negatives - in other words, if you’ve got the Bug there’s a 50/50 chance the test will say you haven’t).

- A positive PCR test is basically always right. But 5.2% of people with the Bug will get a negative PCR result nonetheless.

- Of the million kids (remember: about 995,000 are fine, about 5,000 have the Bug), the LFD will flag 2,500 of the kids with the Bug. (Yes, the other 2,500 won’t get flagged. But that’s a different problem…) It’ll also flag about 300 kids who are clean. Oops.

- So 2,800 kids get sent home, along with their close contacts. Assume all 2,800 then have a PCR test.

- The zero-false-positive thing means all 300 of the mistakes will get picked up. Yay! Back to school for them and their classmates?

- Er… no. Here’s the problem. That roughly 1-in-20 false negative rate means that about 125 of the 2,500 kids who DO have the Bug will get a negative result as well.

- So of the 425-odd kids whose PCR result suggests they should be allowed back into school, almost a third are actually Bugged.
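The arithmetic in the list above can be checked in a few lines of Python. This is a minimal sketch, not anyone's official model: the rates are the ones quoted from Anthony's piece, the PCR false positive rate is assumed to be exactly zero, and the function and variable names are my own invention. Using the exact 49.9% and 5.2% rates rather than the rounded "half" and "1 in 20", it comes out at roughly 130 wrongly cleared kids out of about 429 negative PCR results, or about 30%, which is close to the rounded 125 and 425 above.

```python
# Rates as quoted in the piece; names are illustrative only.
LFD_FALSE_POSITIVE = 0.0003  # 0.03% of clean kids test positive on LFD
LFD_FALSE_NEGATIVE = 0.499   # 49.9% of infected kids test negative on LFD
PCR_FALSE_POSITIVE = 0.0     # assumption: treated as exactly zero
PCR_FALSE_NEGATIVE = 0.052   # 5.2% of infected kids test negative on PCR


def school_testing(population, prevalence):
    """Follow a population through LFD screening, then PCR for those flagged."""
    infected = population * prevalence
    clean = population - infected

    # Step 1: everyone takes an LFD.
    lfd_flagged_infected = infected * (1 - LFD_FALSE_NEGATIVE)
    lfd_flagged_clean = clean * LFD_FALSE_POSITIVE
    missed_by_lfd = infected * LFD_FALSE_NEGATIVE

    # Step 2: everyone flagged by the LFD takes a PCR.
    pcr_negative_clean = lfd_flagged_clean * (1 - PCR_FALSE_POSITIVE)
    pcr_negative_infected = lfd_flagged_infected * PCR_FALSE_NEGATIVE
    pcr_negative_total = pcr_negative_clean + pcr_negative_infected

    return {
        "sent_home": lfd_flagged_infected + lfd_flagged_clean,
        "missed_by_lfd": missed_by_lfd,
        "pcr_negative_total": pcr_negative_total,
        "pcr_negative_infected": pcr_negative_infected,
        "share_of_all_clears_wrong": pcr_negative_infected / pcr_negative_total,
    }


result = school_testing(population=1_000_000, prevalence=1 / 200)
for name, value in result.items():
    print(f"{name}: {value:,.3f}")
```

The last figure printed is the one that matters for the return-to-school argument: the fraction of kids with a negative PCR who nonetheless have the Bug.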

This, says Anthony, is why the government is right to disallow immediate return after a negative PCR. And I see his point. The stats are right, if utterly counter-intuitive.

What’s this got to do with advocacy? Well, so much of our work involves numbers. In crime, it’s DNA tests. In personal injury, it’s causation for some kinds of illness and injury. In commercial matters, we spend our lives poring over company accounts and arguing over experts who tell us what’s likely and what’s not. And an awareness of Bayesian reasoning can be a huge help when assessing whose story stacks up.

And if we don’t speak numbers, how can we possibly ensure our clients’ cases are properly put?

This point isn’t new, and the profession knows it. A couple of years ago, working with the Royal Statistical Society, it put together a guide for advocates on statistics and probability. It’s brilliant. Download it, and keep it as a ready reference. And - as I’m trying to do - find ways of illustrating probability that are transparent to people for whom this just isn’t straightforward, or that take into account the times when statistics boggle the common-sense mind.

One final word on Anthony’s piece, though. He rightly points out that these numbers change as the incidence drops. The share of negative PCR results that are wrong (almost a third in the above example), for instance, falls to less than 10% once the incidence of the Bug is down to 1 in 1,000.
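To see how sensitive that figure is to incidence, here is a minimal sketch that works out, for a given incidence, what fraction of the kids who test positive on LFD and then negative on PCR actually have the Bug. The rates are the ones quoted from Anthony's piece; the zero PCR false positive rate is an assumption, and the function name is made up for illustration. The population size cancels out, so only the incidence matters.

```python
# Rates as quoted in the piece; names are illustrative only.
LFD_FALSE_POSITIVE = 0.0003  # 0.03% of clean kids test positive on LFD
LFD_FALSE_NEGATIVE = 0.499   # 49.9% of infected kids test negative on LFD
PCR_FALSE_NEGATIVE = 0.052   # 5.2% of infected kids test negative on PCR
# Assumption: PCR never gives a false positive, so every clean kid
# flagged by the LFD gets a (correct) negative PCR.


def wrong_all_clear_share(prevalence):
    """Fraction of LFD-positive, PCR-negative kids who really have the Bug."""
    infected_and_cleared = prevalence * (1 - LFD_FALSE_NEGATIVE) * PCR_FALSE_NEGATIVE
    clean_and_cleared = (1 - prevalence) * LFD_FALSE_POSITIVE
    return infected_and_cleared / (infected_and_cleared + clean_and_cleared)


for one_in in (100, 200, 1000, 5000):
    share = wrong_all_clear_share(1 / one_in)
    print(f"Incidence 1 in {one_in}: {share:.1%} of PCR all-clears are wrong")
```

At 1 in 200 this gives about 30%, and at 1 in 1,000 about 8%, which matches the "less than 10%" figure.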

But his overall point - that government policy is backed up by the numbers - has one big hole, it seems to me. As we noted, the false negative rate for LFDs is 50%. So even on our example, that’s 2,500 kids WITH the Bug who are in school, in the honest but mistaken belief that they’re no risk to anyone.

In other words, the reliance on LFDs for school testing is a false comfort - a form of pandemic theatre (akin to the security theatre that made air travel such a pain before it was wiped out by the Bug). And compared to that, quibbling over the 125 kids to whom the PCR has wrongly given the all-clear seems a bit pointless.

(An invitation: I like numbers, but I’m not a statistician. If I’ve got any of the above wrong - particularly the final bit about the 2.5k kids innocently swanning around leaking Bug everywhere - let me know and I’ll correct myself.)

Someone is right on the internet: As a follow-up on the font conversation on Monday, I’ve always been a fan of style guides. Not the ghastly prescriptive grammatical guides (Strunk and White, I’m looking at you); I mean the guides some publications craft to help their writers keep things consistent. Good examples come from the Guardian and the Economist.

These, of course, deal with words themselves, not the typography in which they appear. But a good friend (thanks, Ian) points to a guide published by the Securities and Exchange Commission in the US. It’s aimed at people creating investor notifications, for instance about listings, and spends a lot of time suggesting clear language (and is really good on that). But there’s also a chapter (chapter 7) dealing with design, which says wise and interesting things about fonts. Worth a look.

It also makes some worthwhile and entirely true points about layout: for instance, that a ragged right-hand margin is far more readable than a justified one. I’d love to adopt that one in my legal drafting. However, I suspect that if I hand in a Particulars of Claim, or a skeleton argument, with a ragged margin, I’m likely to get into even more trouble than I will by continuing to use Garamond. Baby steps…